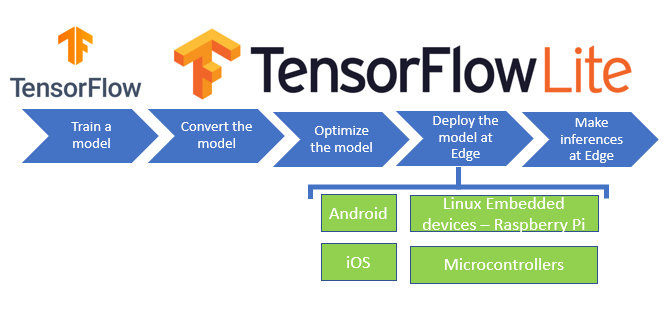

TensorFlow Lite is a set of tools that enables on-device machine learning by helping developers run their models on mobile, embedded, and edge devices.

Why TensorFlow Lite

Machine learning is everywhere. Every day we interact with artificial intelligence (AI) algorithms, from the recommendation systems in apps such as YouTube or Netflix to the notifications promoting new products.

Most of these machine learning models run on cloud servers. In this setup, our devices (smartphones, smart TVs, smartwatches, etc.) are little more than receivers of the results those models produce, which reach us as advertisements, notifications, recommendations, and so on.

But there are many situations where this client-server model doesn't work well, for example:

- Applications where the round-trip between the device and the server is a limitation that affects how they work:

  - a mobile app that helps people with visual impairments detect objects on the road

  - an IoT sensor that must decide in real time whether a particular measurement needs to be stored

- Apps where data privacy is a strong requirement:

  - banking apps

TensorFlow Lite addresses exactly these issues: it makes it simpler to run machine learning directly on edge devices instead of sending data to and from a server. This can bring improvements in:

- Latency: no round-trip to a server

- Privacy: data does not leave the device

- Connectivity: the app can work offline, so an Internet connection is not required

- Bandwidth: no data has to be sent over the network for inference

- Power consumption: fewer network connections, which are power-hungry

You can imagine how powerful this is: you can embed your ML models in a wide range of devices.
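To make the idea of embedding a model more concrete, here is a minimal sketch of the usual TensorFlow Lite workflow in Python: convert a model into the TensorFlow Lite flatbuffer format, then run inference on it locally with `tf.lite.Interpreter`, with no server involved. It assumes TensorFlow 2.x and NumPy are installed. `TinyModel` is a hypothetical two-layer-free toy model standing in for a real trained one. On a phone, the same flatbuffer bytes would be loaded by the mobile TensorFlow Lite runtime rather than by this Python interpreter.

```python
import numpy as np
import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical toy classifier standing in for a real trained model."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([4, 2]))
        self.b = tf.Variable(tf.zeros([2]))

    # A fixed input signature lets the converter trace a concrete graph.
    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
    def __call__(self, x):
        return tf.nn.softmax(tf.matmul(x, self.w) + self.b)


model = TinyModel()

# Convert the traced function into a TensorFlow Lite flatbuffer (raw bytes).
# These bytes are what would be shipped inside the mobile app.
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [model.__call__.get_concrete_function()], model
)
tflite_model = converter.convert()

# Load the flatbuffer into the TFLite interpreter and run one inference locally.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()

sample = np.random.rand(1, 4).astype(np.float32)
interpreter.set_tensor(input_details[0]["index"], sample)
interpreter.invoke()
prediction = interpreter.get_tensor(output_details[0]["index"])

# Sanity check: the converted model should agree with the original one.
reference = model(tf.constant(sample)).numpy()
print(prediction, reference)
```

Comparing `prediction` with `reference` is a quick way to confirm that the conversion preserved the model's behavior before moving it onto a device.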

In this post, I will show you step by step how to build a mobile app using Flutter and TensorFlow Lite.

All the steps are summarized in the following image: