| YearsExperience | Salary | |

|---|---|---|

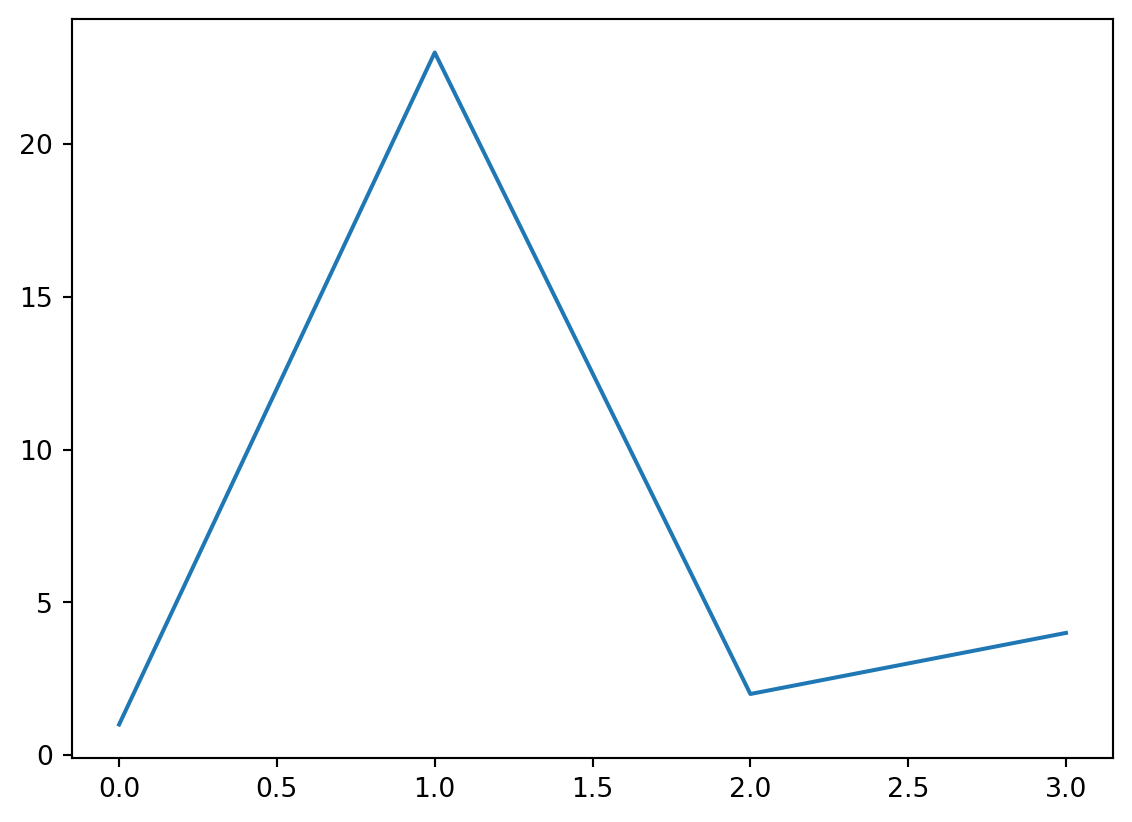

| 0 | 1.1 | 39343.0 |

| 1 | 1.3 | 46205.0 |

| 2 | 1.5 | 37731.0 |

| 3 | 2.0 | 43525.0 |

| 4 | 2.2 | 39891.0 |

3 Linear Regression

Linear regression is a statistical method for modeling the relationship between a dependent variable (also known as the response variable) and one or more independent variables (also known as predictor variables). The goal is to find the line of best fit through a set of data points. In ordinary least squares (OLS), the most common approach, "best" means the line that minimizes the sum of squared vertical distances (residuals) between the observed points and the line. With a single independent variable, this line has the form \(y = mx + b\), where \(y\) is the dependent variable, \(x\) is the independent variable, \(m\) is the slope, and \(b\) is the y-intercept. Linear regression covers both simple linear regression (one independent variable) and multiple linear regression (more than one independent variable). With several predictors, the fitted line generalizes to a plane or hyperplane.
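To make "best fit" concrete, here is a minimal Python sketch that scores a candidate line \(y = mx + b\) by its sum of squared residuals, using the five rows shown in the table above as sample data. The helper names `predict` and `sse` are hypothetical, and the two candidate lines are arbitrary choices for comparison. Under OLS, the line of best fit is the \((m, b)\) pair that makes this score as small as possible, and the estimators for \(\hat \beta_{1}\) and \(\hat \beta_{0}\) below give that pair in closed form.

```python
# Sample data: the five rows shown in the table above
years = [1.1, 1.3, 1.5, 2.0, 2.2]
salary = [39343.0, 46205.0, 37731.0, 43525.0, 39891.0]


def predict(m, b, x):
    """Value of the line y = m*x + b at x."""
    return m * x + b


def sse(m, b, xs, ys):
    """Sum of squared residuals (vertical distances) between the data and the line y = m*x + b."""
    return sum((y - predict(m, b, x)) ** 2 for x, y in zip(xs, ys))


# Two arbitrary candidate lines; OLS prefers whichever (m, b) gives the smaller SSE
flat = sse(0.0, 41339.0, years, salary)       # horizontal line at the mean of these five salaries
steep = sse(10000.0, 25000.0, years, salary)  # an arbitrary steep line
print(f"flat line SSE:  {flat:,.0f}")
print(f"steep line SSE: {steep:,.0f}")
```

Squaring the residuals keeps positive and negative errors from cancelling and penalizes large misses more heavily than small ones.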

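For simple linear regression with \(n\) observations \((X_{i}, Y_{i})\), the model can be written as

\[Y_{i} = \beta_{0} + \beta_{1} \times X_{i} + \varepsilon_{i}\]

where \(\beta_{0}\) is the intercept (the \(b\) above), \(\beta_{1}\) is the slope (the \(m\) above), and \(\varepsilon_{i}\) is a random error term. The OLS estimate of the slope, which minimizes the sum of squared residuals, is

\[\hat \beta_{1} = \frac{\sum_{i=1}^n (X_{i} - \bar X)(Y_{i} - \bar Y)}{\sum_{i=1}^n (X_{i} - \bar X)^2}\]

where \(\bar X\) and \(\bar Y\) are the sample means of \(X\) and \(Y\).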

To estimate the intercept \(\beta_{0}\), we can use the following:

\[\hat \beta_{0} = \bar Y - \hat \beta_{1} \times \bar X\]
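The two estimators translate directly into code. Below is a minimal pure-Python sketch that applies them to the five rows shown in the table above; the function name `fit_simple_ols` is hypothetical, and a real analysis would normally use a library routine instead.

```python
def fit_simple_ols(xs, ys):
    """Return (beta0_hat, beta1_hat) for Y = beta0 + beta1 * X using the OLS formulas."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need two equal-length sequences with at least two points")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Numerator: sum of (X_i - X_bar)(Y_i - Y_bar); denominator: sum of (X_i - X_bar)^2
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all X values are identical; the slope is undefined")
    beta1 = sxy / sxx
    # The fitted line passes through the point of means (X_bar, Y_bar)
    beta0 = y_bar - beta1 * x_bar
    return beta0, beta1


# The five rows from the table above
years = [1.1, 1.3, 1.5, 2.0, 2.2]
salary = [39343.0, 46205.0, 37731.0, 43525.0, 39891.0]
b0, b1 = fit_simple_ols(years, salary)
print(f"intercept = {b0:.2f}, slope = {b1:.2f}")
```

On these five rows alone the estimated slope comes out slightly negative (about -110 per year of experience). Five closely spaced points are far too few to say anything about the full dataset, so treat this only as a check of the arithmetic.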

3.1 Learning by Examples

For this example, we will use salary data from Kaggle. The data consists of two columns: years of experience and the corresponding salary. The data can be downloaded from here.
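If the downloaded file is a CSV with `YearsExperience` and `Salary` columns (the column names shown in the table above), it can be read with the standard library alone. A minimal sketch follows; the function name `load_salary_csv` and the file name `Salary_Data.csv` are hypothetical placeholders, not taken from the dataset page.

```python
import csv


def load_salary_csv(path):
    """Read a CSV with YearsExperience and Salary columns into two lists of floats."""
    years, salary = [], []
    with open(path, newline="") as f:
        # DictReader maps each row to {column name: string value}; other columns are ignored
        for row in csv.DictReader(f):
            years.append(float(row["YearsExperience"]))
            salary.append(float(row["Salary"]))
    return years, salary


# Usage (hypothetical file name; adjust to wherever the download was saved):
# years, salary = load_salary_csv("Salary_Data.csv")
```

Looking columns up by name rather than by position means an extra index column, like the unnamed first column in the table above, does not break the loader.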


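```python
import plotly.express as px
import plotly.io as pio

# Render figures inline in the notebook and also as static images for PDF output
pio.renderers.default = "notebook+pdf"
# Static image export (via kaleido) defaults to PNG
pio.kaleido.scope.default_format = "png"

# Load the iris sample dataset that ships with plotly.express
df = px.data.iris()

# Scatter plot with marginal box/violin plots and one OLS trend line per species
# (trendline="ols" requires the statsmodels package)
fig = px.scatter(df, x="sepal_width", y="sepal_length",
                 color="species",
                 marginal_y="violin", marginal_x="box",
                 trendline="ols", template="simple_white")
fig.show()
```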